- Conda: how to install a package into a specific environment (how-to and code)

Next, activate the virtualenv: source env_1/bin/activate # activate virtualenvĪfter that you can run PySpark in local mode, where it will run under virtual environment env_1. You should specify the python version, in case you have multiple versions installed.

We use the following command to create and set up env_1 in the local environment: virtualenv env_1 -p /usr/local/bin/python3 # create virtual environment env_1įolder env_1 will be created under the current working directory. We highly recommend that you create an isolated virtual environment locally first, so that the move to a distributed virtualenv will be more smooth. Sc.parallelize(range(1,10)).map(lambda x : np._version_).collect() Using virtualenv in the Local Environmentįirst we will create a virtual environment in the local environment.

CONDA INSTALL PACKAGE INTO A SPECIFIC ENVIRONMENT CODE

We save the code in a file named spark_virtualenv.py.įrom pyspark import SparkContext if _name_ = "_main_": This piece of code uses numpy in each map function. In this example we will use the following piece of code. Batch modeįor batch mode, I will follow the pattern of first developing the example in a local environment, and then moving it to a distributed environment, so that you can follow the same pattern for your development. In HDP 2.6 we support batch mode, but this post also includes a preview of interactive mode.

CONDA INSTALL PACKAGE INTO A SPECIFIC ENVIRONMENT HOW TO

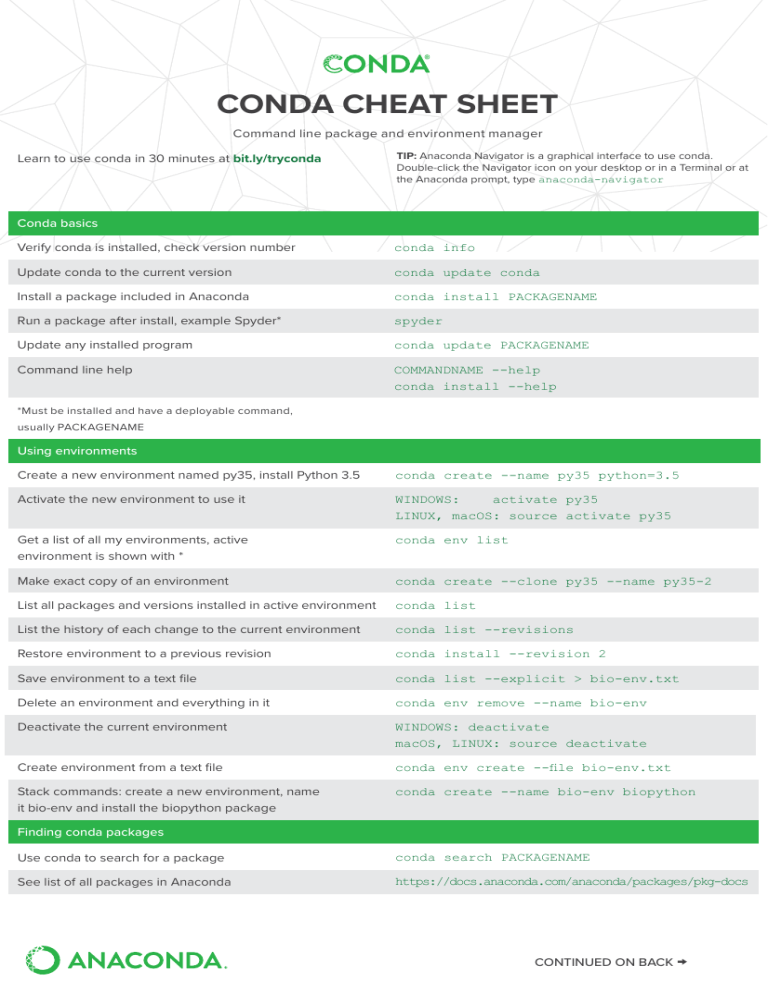

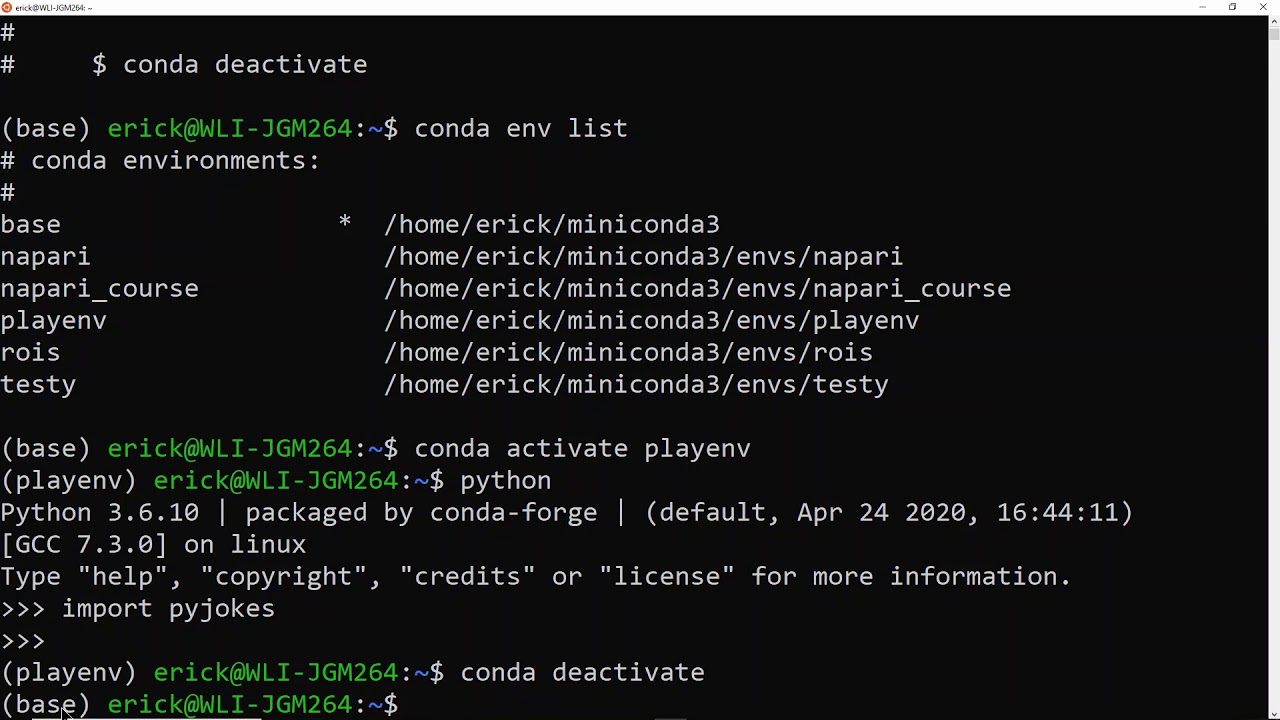

Now I will talk about how to set up a virtual environment in PySpark, using virtualenv and conda.

Python 2.7 or Python 3.x must be installed (pip is also installed).Each node must have internet access (for downloading packages).Note that pip is required to run virtualenv for pip installation instructions, see. Either virtualenv or conda should be installed in the same location on all nodes across the cluster. All nodes must have either virtualenv or conda installed, depending on which virtual environment tool you choose. Hortonworks supports two approaches for setting up a virtual environment: virtualenv and conda.(This feature is currently only supported in yarn mode.) Prerequisites In this article, I will talk about how to use virtual environment in PySpark. This eases the transition from local environment to distributed environment with PySpark. We recently enabled virtual environments for PySpark in distributed environments. For such scenarios with large PySpark applications, `-py-files` is inconvenient.įortunately, in the Python world you can create a virtual environment as an isolated Python runtime environment. And, there are times when you might want to run different versions of python for different applications. Sometimes a large application needs a Python package that has C code to compile before installation. A large PySpark application will have many dependencies, possibly including transitive dependencies. Type "help", "copyright", "credits" or "license" for more information.For a simple PySpark application, you can use `-py-files` to specify its dependencies. The following packages will be downloaded: How can I fix this? NAACL2018]$ conda install pytorch torchvision -c pytorchĮnvironment location: /scratch/sjn/anaconda I am trying to install torch using conda however, it installs it into another conda environment not the one I am interested in.
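Before launching PySpark, you may want to confirm that activating env_1 actually took effect. The following is a minimal sketch, not part of the original setup. It assumes that the stdlib `venv` module and virtualenv 20+ set `sys.prefix` to the environment directory while `sys.base_prefix` keeps the base installation; older virtualenv releases set `sys.real_prefix` instead. The function name `in_virtualenv` is hypothetical.

```python
import sys


def in_virtualenv():
    """Return True if this interpreter runs inside a venv/virtualenv.

    venv and virtualenv 20+ point sys.prefix at the environment while
    sys.base_prefix keeps the base install; older virtualenv releases
    set sys.real_prefix instead.
    """
    if hasattr(sys, "real_prefix"):
        return True
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("interpreter:", sys.executable)
    print("inside virtualenv:", in_virtualenv())
```

Run it with the `python` on your PATH after `source env_1/bin/activate`. The reported interpreter path should point into the env_1 folder.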

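In the simple case mentioned above, where `--py-files` is enough, dependencies are typically shipped as a zip archive. Here is a hedged, standard-library-only sketch of building one. The package name `mylib`, the archive name `deps.zip`, and the helper `zip_package` are hypothetical. Python's zip importer cannot load compiled extension modules, which is one reason packages with C code are a poor fit for `--py-files`. For that reason the sketch only collects `.py` files.

```python
import os
import zipfile


def zip_package(package_dir, zip_path):
    """Zip the .py files of a pure-Python package for `--py-files`.

    Archive entries are stored relative to the package's parent directory,
    so `import <package>` works once the zip is on sys.path.
    """
    package_dir = os.path.abspath(package_dir)
    parent = os.path.dirname(package_dir)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(package_dir):
            for name in sorted(files):
                # Compiled extensions (.so/.pyd) cannot be imported from a zip.
                if name.endswith(".py"):
                    full_path = os.path.join(root, name)
                    zf.write(full_path, os.path.relpath(full_path, parent))
    return zip_path
```

For example, `zip_package("mylib", "deps.zip")` followed by `spark-submit --py-files deps.zip spark_virtualenv.py`. Inside a running job, `sc.addPyFile("deps.zip")` does the same.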

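For the torch question at the top of this page, the usual fix is to name the target environment explicitly rather than relying on whichever environment is active. You can also run `conda activate` on that environment before installing. `conda install` accepts `-n/--name` for a named environment or `-p/--prefix` for a path, alongside `-c/--channel`. The reported `environment location: /scratch/sjn/anaconda` suggests the install went to the base installation. The sketch below builds an explicitly targeted command and reports which environment the current interpreter belongs to. The environment name `myenv` and the helper names are hypothetical.

```python
import os
import sys


def conda_install_cmd(packages, env_name=None, prefix=None, channels=()):
    """Build a `conda install` argument list that targets one environment.

    Exactly one of env_name (passed as --name) or prefix (passed as --prefix)
    is required, so the install cannot fall back to whichever env is active.
    """
    if (env_name is None) == (prefix is None):
        raise ValueError("pass exactly one of env_name or prefix")
    cmd = ["conda", "install"]
    if env_name is not None:
        cmd += ["--name", env_name]
    else:
        cmd += ["--prefix", prefix]
    for channel in channels:
        cmd += ["--channel", channel]
    cmd += list(packages)
    return cmd


def describe_active_env():
    """Report which environment the running interpreter belongs to."""
    return {
        "python": sys.executable,
        "sys.prefix": sys.prefix,
        # Both variables are set by `conda activate`; None means none is active.
        "CONDA_PREFIX": os.environ.get("CONDA_PREFIX"),
        "CONDA_DEFAULT_ENV": os.environ.get("CONDA_DEFAULT_ENV"),
    }


if __name__ == "__main__":
    # "myenv" is a hypothetical environment name; substitute your own.
    cmd = conda_install_cmd(["pytorch", "torchvision"], env_name="myenv",
                            channels=["pytorch"])
    print(" ".join(cmd))
    print(describe_active_env())
```

The printed command is `conda install --name myenv --channel pytorch pytorch torchvision`. You can type it directly or pass the list to `subprocess.run(cmd, check=True)`.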